Platform Guide

Complete reference guide for the Teckel AI system. This guide covers every platform feature with screenshots and examples.

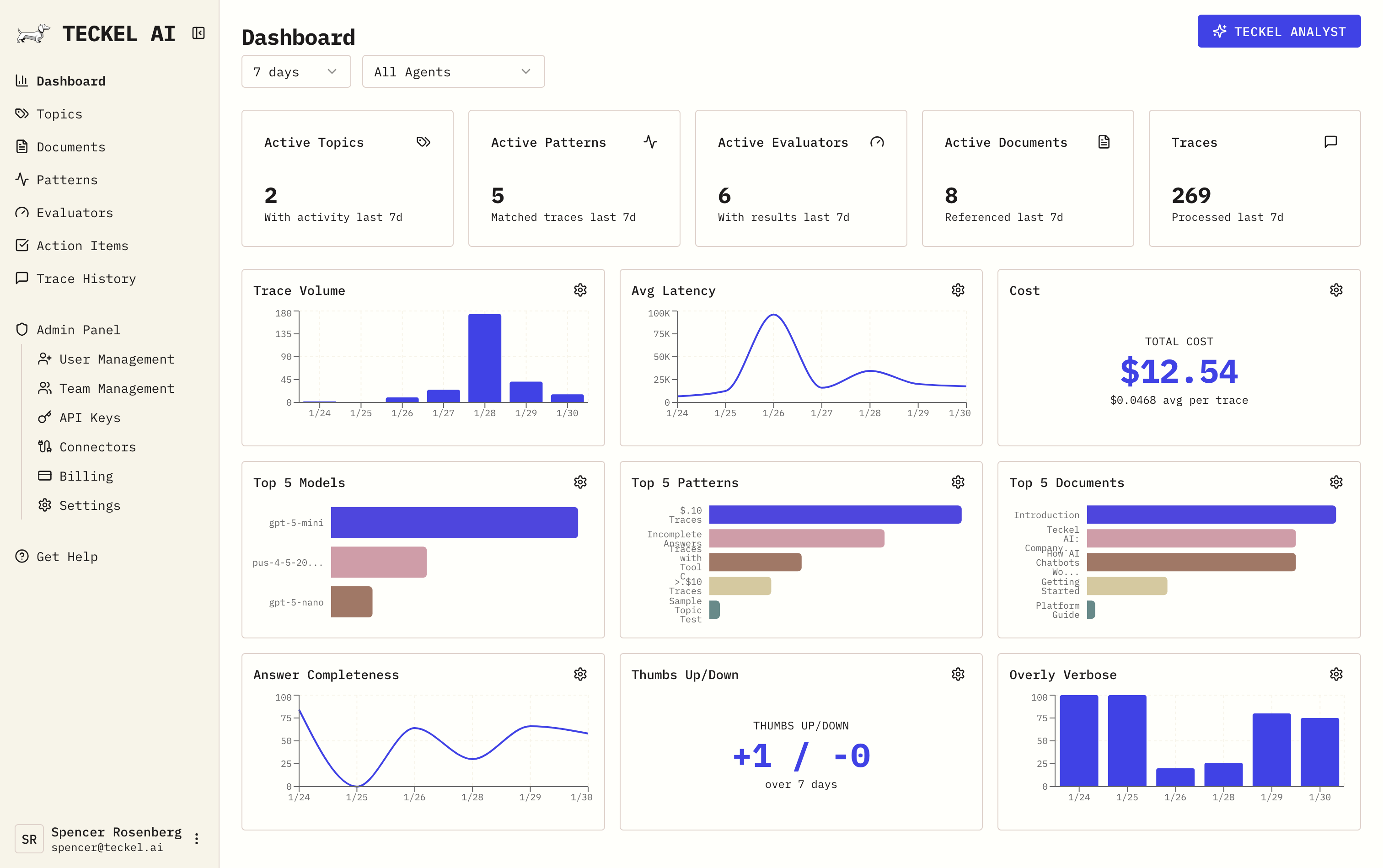

Dashboard

The main dashboard gives you an overview of the health of all your agents and your AI system through customizable charts.

Customizable charts

- Click the settings icon to configure the analytics you care about

- Metrics include: custom evaluator scores, pattern counts, cost, latency, tokens, and more

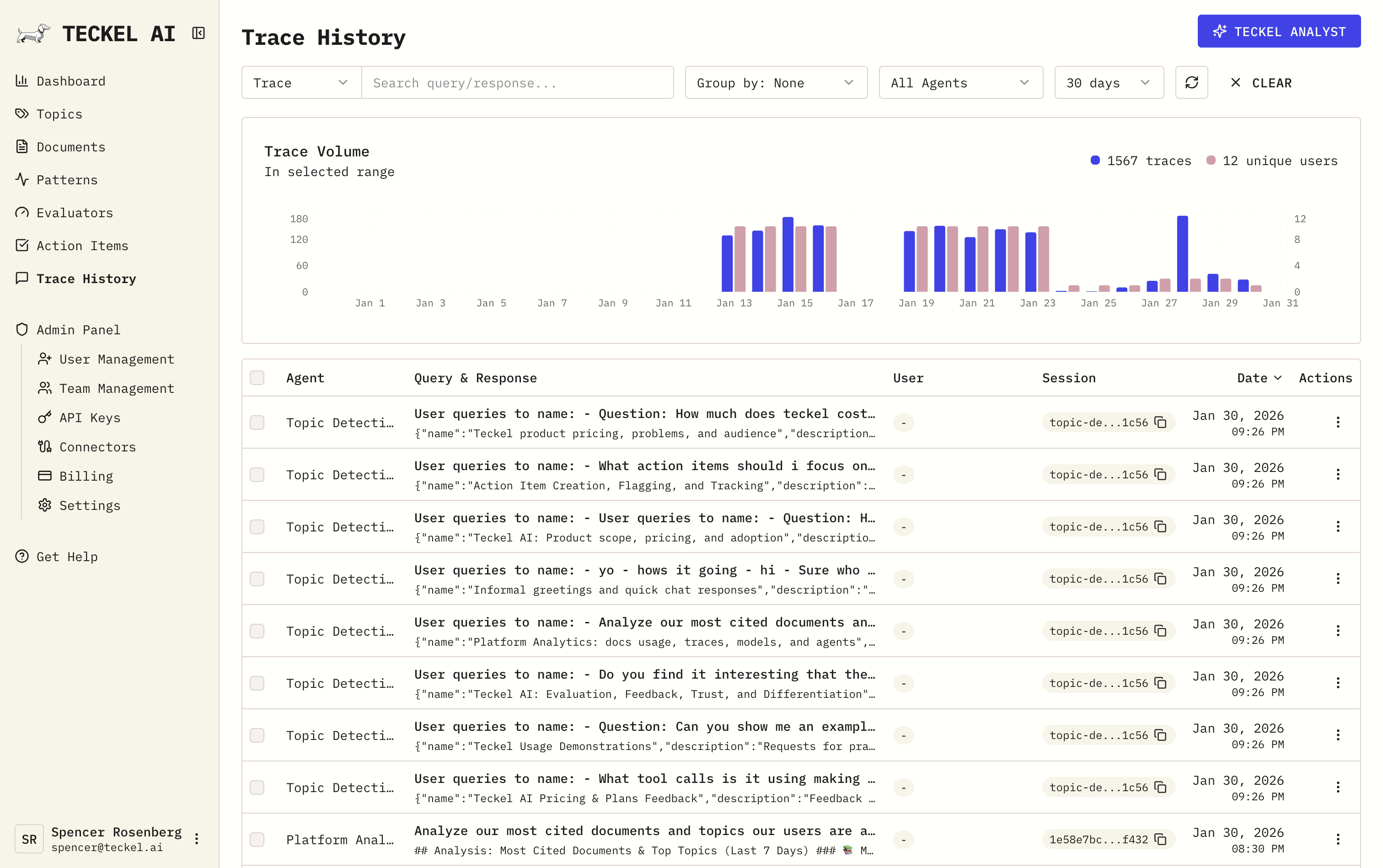

Traces

A record of every interaction your agents handle.

Filtering options

- Semantic search: Search by meaning ("complaints about shipping")

- Text filters: Query or response contains specific text

- Score filters: Evaluator scores above/below thresholds

- Topic filters: Traces classified under semantic topic areas

- Document filters: Traces that retrieved the same documents via RAG

- Group by category: View users, topics, conversations, and more in easy-to-analyze groups

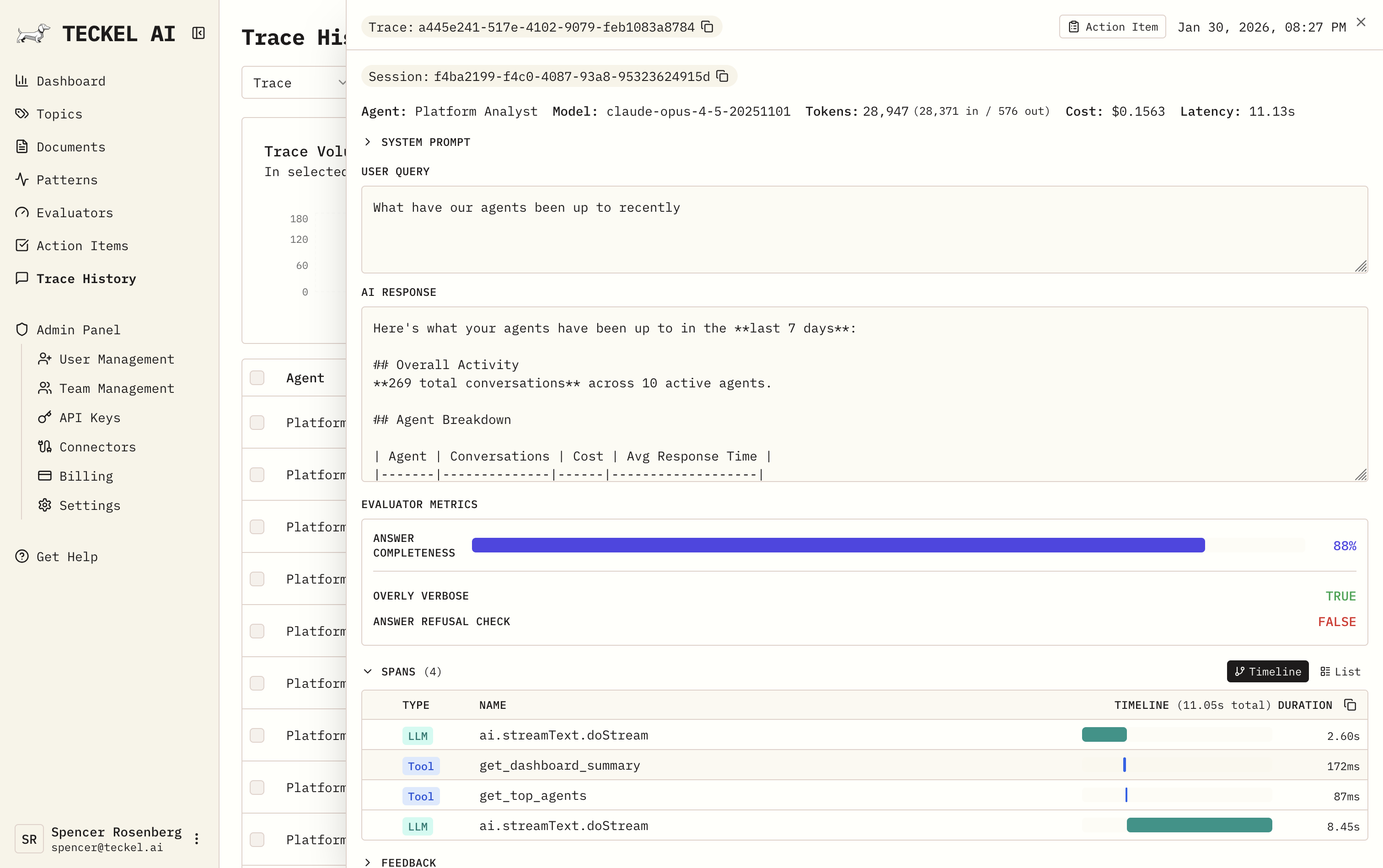
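The text, score, and topic filters listed above behave like predicates over trace records. A minimal sketch in Python, assuming a hypothetical trace shape: the `Trace` fields and the `filter_traces` helper are illustrative only and are not Teckel's API or export format.

```python
from dataclasses import dataclass, field


@dataclass
class Trace:
    # Hypothetical trace record; field names are assumptions for illustration.
    query: str
    response: str
    topic: str
    scores: dict[str, float] = field(default_factory=dict)


def filter_traces(traces, text=None, topic=None, max_scores=None):
    """Keep traces that satisfy every filter that was supplied."""
    max_scores = max_scores or {}
    kept = []
    for t in traces:
        # Text filter: query or response contains the text (case-insensitive).
        if text is not None:
            needle = text.lower()
            if needle not in t.query.lower() and needle not in t.response.lower():
                continue
        # Topic filter: exact match on the classified topic.
        if topic is not None and t.topic != topic:
            continue
        # Score filter: each named score must be below its threshold.
        # A missing score defaults to 1.0, so it never counts as "below".
        if any(t.scores.get(name, 1.0) >= limit for name, limit in max_scores.items()):
            continue
        kept.append(t)
    return kept


traces = [
    Trace("Where is my refund?", "Refunds take 5 days.", "Billing", {"Completeness": 0.9}),
    Trace("Refund status?", "Please contact support.", "Billing", {"Completeness": 0.4}),
    Trace("Reset my password", "Use the reset link.", "Account", {"Completeness": 0.5}),
]

low_billing = filter_traces(
    traces, text="refund", topic="Billing", max_scores={"Completeness": 0.7}
)
```

Here `low_billing` holds only the second trace: it mentions refunds, is classified under Billing, and scores below 0.7 on Completeness. Semantic search is not modeled, since it depends on embeddings rather than simple predicates.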

Trace detail

Click any trace to see:

- Full query, response, and all spans including tool calls and more

- All custom evaluator scores, including scores for documents retrieved via RAG

- Retrieved document chunks, semantic topic area, and agents involved

- Cost, latency, tokens, and more

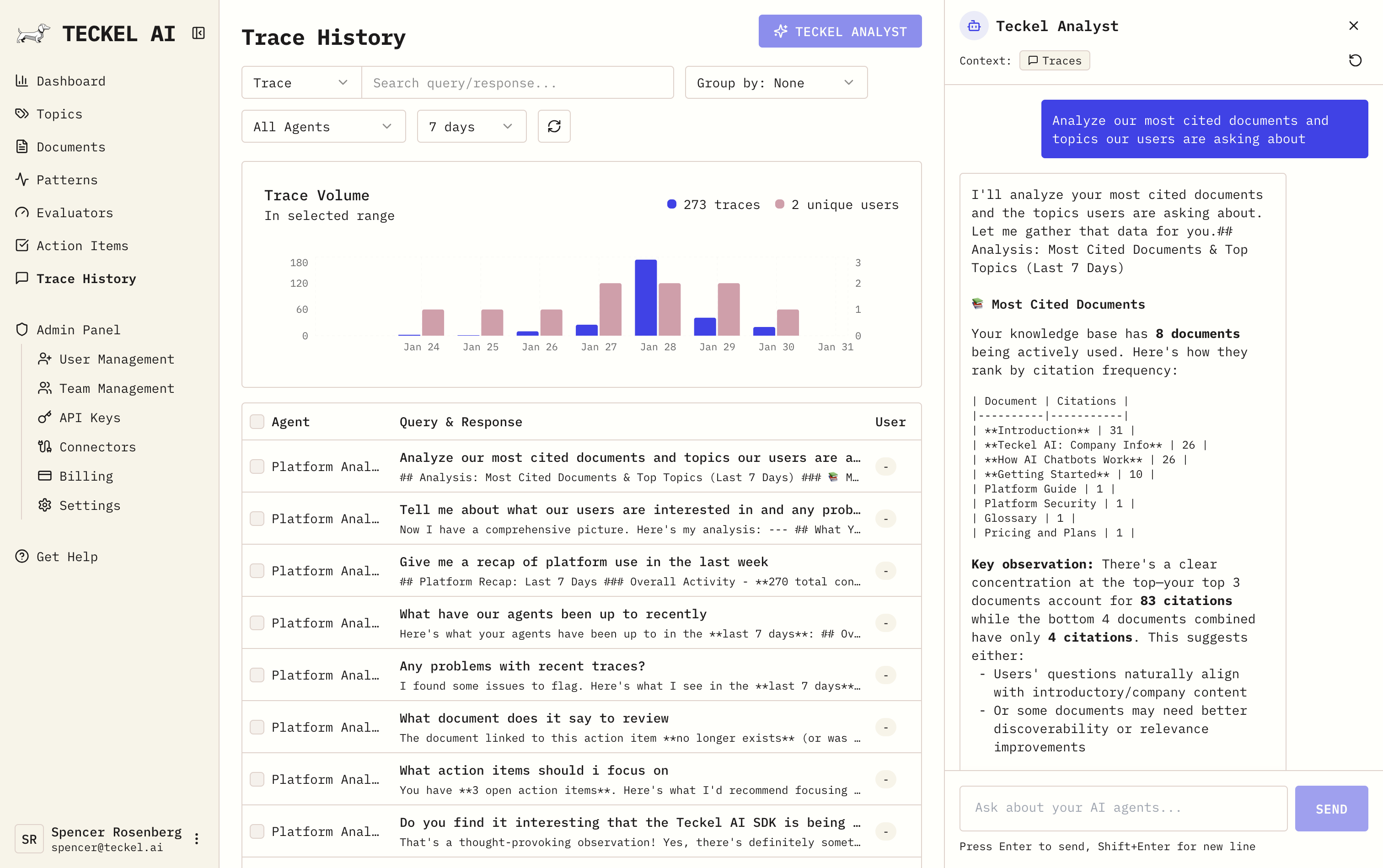

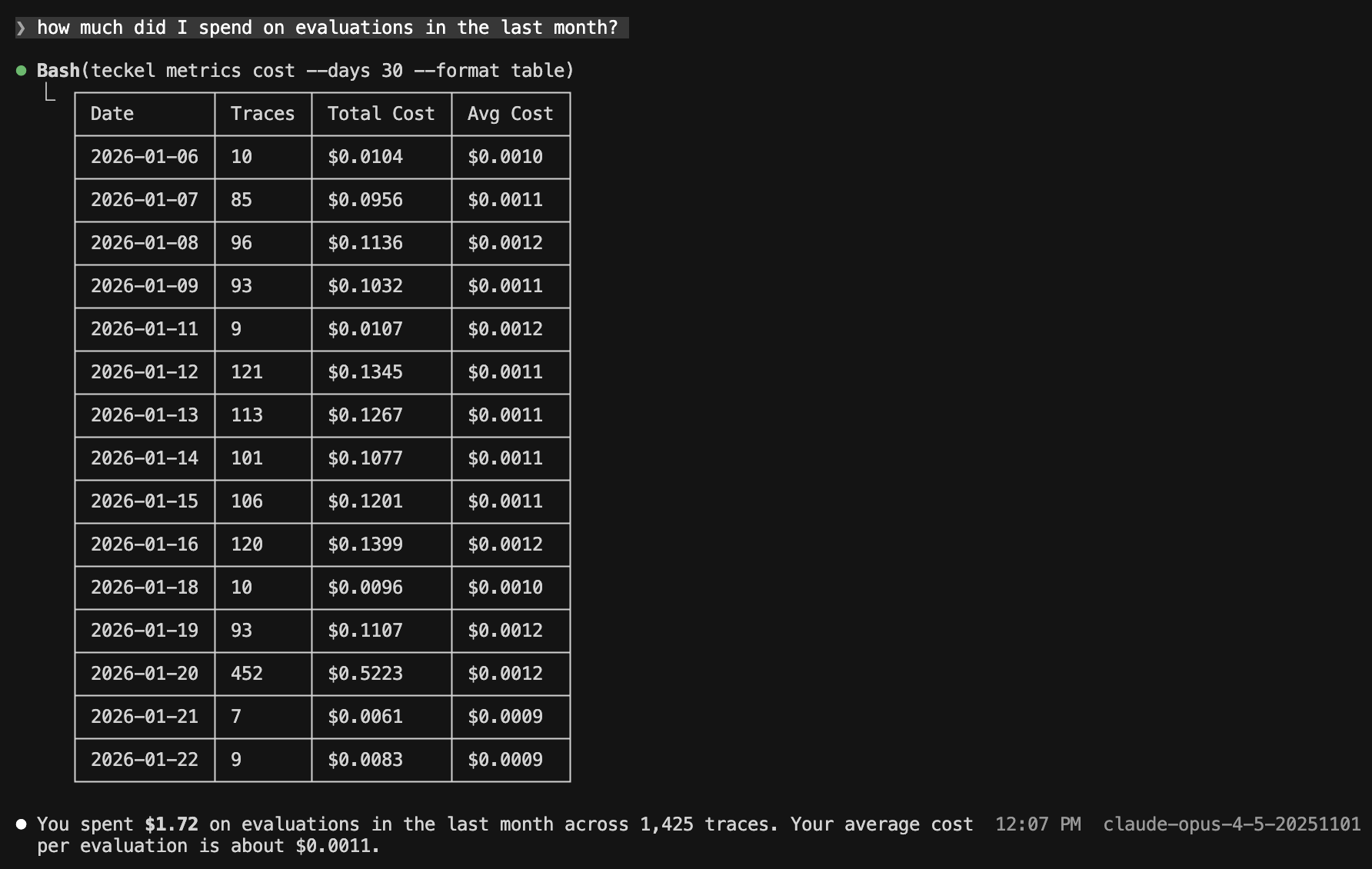

Teckel Analyst

Teckel includes a built-in AI analyst with secure, read-only access to your dashboard. Think of it as a senior data scientist on call who can use the platform the same way you can. It can summarize usage: ask how much each agent cost to run over the past month, or have it find inefficient tool calls that drive up costs. It can also identify issues: ask it to find knowledge gaps across topics of interest, explain why users are giving certain responses “thumbs down” feedback, or flag a problem and write up an action item for your team to review later.

Today the Teckel Analyst is available in the platform, with CLI functionality coming soon. Once the CLI ships, you’ll be able to pull trace logs directly into your Codex or Claude Code instance to debug and fix your agents on the spot.

What it can do:

- Find issues fast: Ask "What are the most common failure patterns this week?" or "Show me traces where accuracy dropped below 0.5"

- Spot usage patterns: "Which topics have the highest volume on weekends?" or "What's the average cost per trace for billing questions?"

- Summarize anything: "Summarize the top 5 issues affecting customer satisfaction" or "Give me a breakdown of evaluator scores by topic"

- Investigate anomalies: "Why did latency spike yesterday at 3pm?" or "What changed in the last 24 hours?"

How to use it:

Open the analyst panel from any page and ask questions in natural language. The analyst can query traces, evaluator results, topics, patterns, documents, and system analytics: anything you could find yourself by navigating the dashboard.

Security: The Teckel Analyst has the same permissions as your account and fetches data through the same process that feeds the dashboard. It can only read information, never modify it.

Example prompts:

- "What percentage of billing-related traces have completeness scores below 0.7?"

- "List the top 10 documents by retrieval frequency this month"

- "Compare average accuracy scores between last week and this week"

- "Find traces where users asked about refunds but got low satisfaction scores"

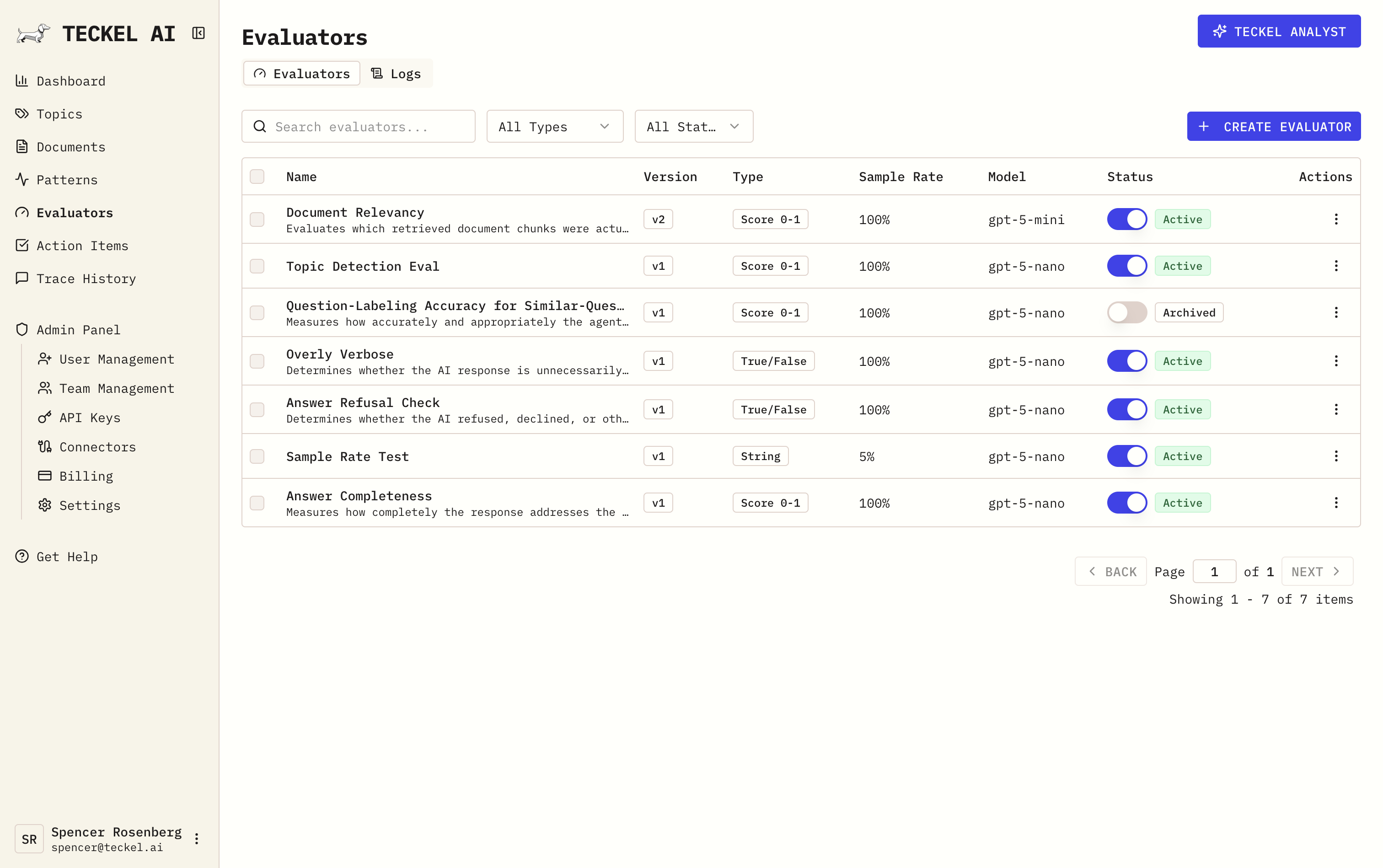

Evaluators

Evaluators are customizable LLM-as-a-Judge evaluations that you define to measure response quality. They are designed to run cheaply and automatically on production traces, so you can find and fix the problems that matter to you. Uniquely, Teckel AI offers RAGAS-inspired document-chunk analysis in the platform, letting you grade how well your RAG retrieval is working.

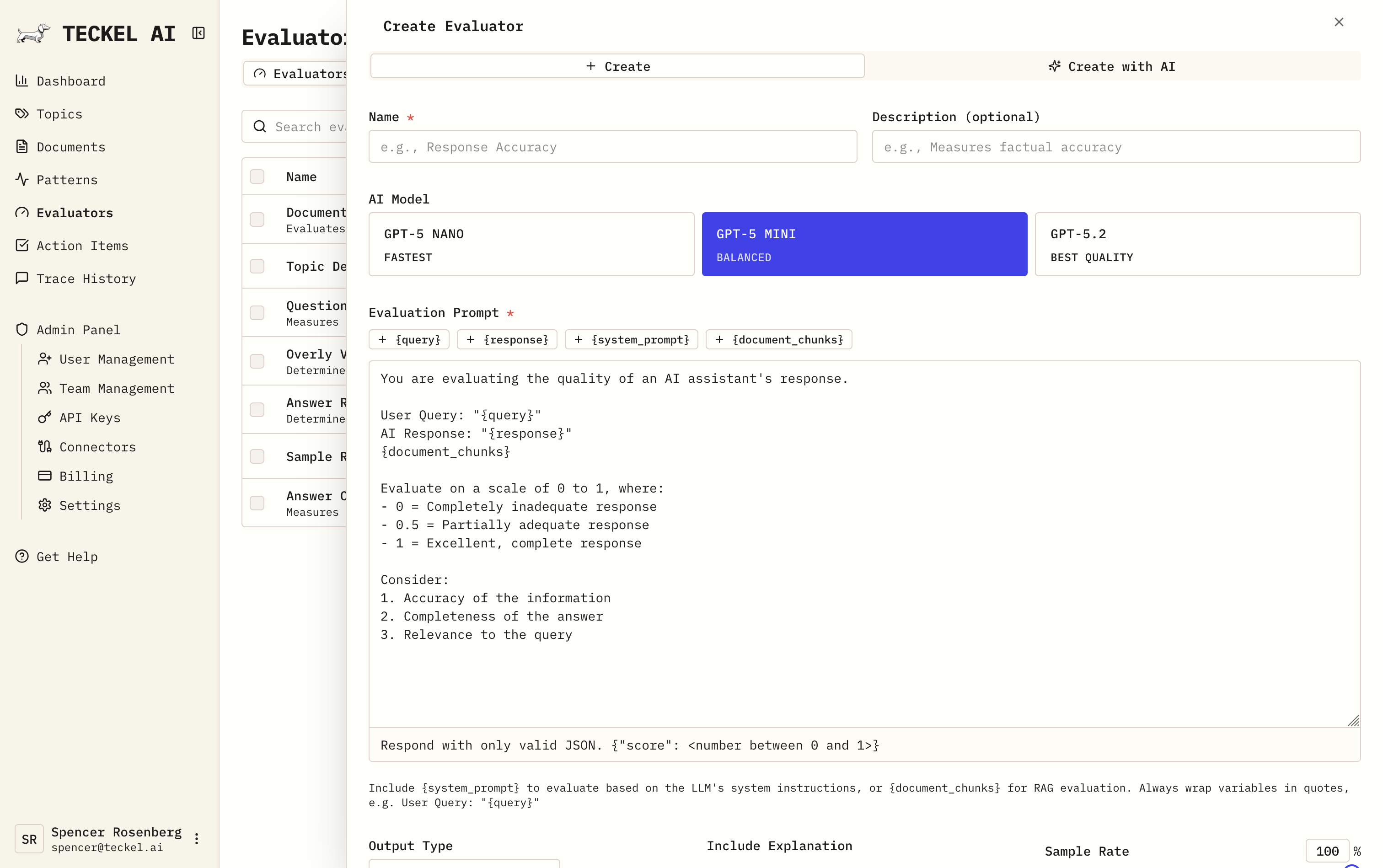

Creating Evaluators

Each evaluator needs:

- Name: "Completeness", "Tone", "Contains Disclaimer", etc.

- Prompt template: Instructions with variables

- Output type: Numeric (0-1), boolean, or string

- Sample rate: 100% for critical metrics, lower for cost control

- Model: Which LLM runs the evaluation
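To make the fields above concrete, here is a minimal sketch of an evaluator definition together with one way a sample rate could be applied. The `EvaluatorConfig` class, the `should_evaluate` helper, and the model name are hypothetical; how Teckel actually samples traces is not described in this guide, and hash-based sampling is only one common approach.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class EvaluatorConfig:
    # Hypothetical shape mirroring the fields listed above.
    name: str
    prompt_template: str
    output_type: str    # "numeric", "boolean", or "string"
    sample_rate: float  # fraction of traces to evaluate, 0.0 to 1.0
    model: str


def should_evaluate(trace_id: str, evaluator: EvaluatorConfig) -> bool:
    """Deterministically sample traces by hashing the trace ID with the evaluator name."""
    digest = hashlib.sha256(f"{evaluator.name}:{trace_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return bucket < evaluator.sample_rate


completeness = EvaluatorConfig(
    name="Completeness",
    prompt_template="User question: {query}\nAI response: {response}",
    output_type="numeric",
    sample_rate=1.0,              # critical metric: evaluate every trace
    model="example-judge-model",  # placeholder, not a real model name
)
```

Hashing the trace ID rather than drawing a random number means the same trace is always either in or out of the sample, which keeps results reproducible when a trace is reprocessed.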

Template variables:

- {query}: The user's question

- {response}: The AI's answer

- {system_prompt}: Your agent’s system prompt

- {document_chunks}: RAG document chunks

Example prompt (completeness):

Evaluate how completely this response answers the user's question.

User question: {query}

AI response: {response}

Rate from 0 to 1:

- 1.0 = Fully answers with all relevant details

- 0.7-0.9 = Mostly complete with minor gaps

- 0.4-0.6 = Partially addresses the question

- 0.1-0.3 = Barely relevant

- 0 = Does not address the question

Return only the numeric score.
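As an illustration of how such a template could be filled in and its result interpreted, the sketch below substitutes the template variables with Python's `str.format` and parses a numeric reply. The template is a shortened version of the completeness prompt, and `render_prompt` and `parse_numeric_score` are hypothetical helpers, not part of Teckel; the call to the judge model itself is left out.

```python
import re

COMPLETENESS_TEMPLATE = (
    "Evaluate how completely this response answers the user's question.\n"
    "User question: {query}\n"
    "AI response: {response}\n"
    "Return only the numeric score."
)


def render_prompt(template: str, **variables: str) -> str:
    """Substitute {query}, {response}, etc. Raises KeyError if a variable is missing."""
    return template.format(**variables)


def parse_numeric_score(judge_output: str) -> float:
    """Extract the first number from the judge's reply and clamp it to [0, 1]."""
    match = re.search(r"-?\d+(?:\.\d+)?", judge_output)
    if match is None:
        raise ValueError(f"no numeric score in {judge_output!r}")
    return min(1.0, max(0.0, float(match.group())))


prompt = render_prompt(
    COMPLETENESS_TEMPLATE,
    query="How do I reset my password?",
    response="Click 'Forgot password' on the login page.",
)
score = parse_numeric_score("0.8")
```

Clamping guards against a judge that replies with a value slightly outside the 0 to 1 range. Note that `str.format` treats any other curly braces in the template as placeholders, so a template containing literal braces would need them doubled.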

Natural language creation: Describe what you want to track and let Teckel generate the prompt for you to revise.

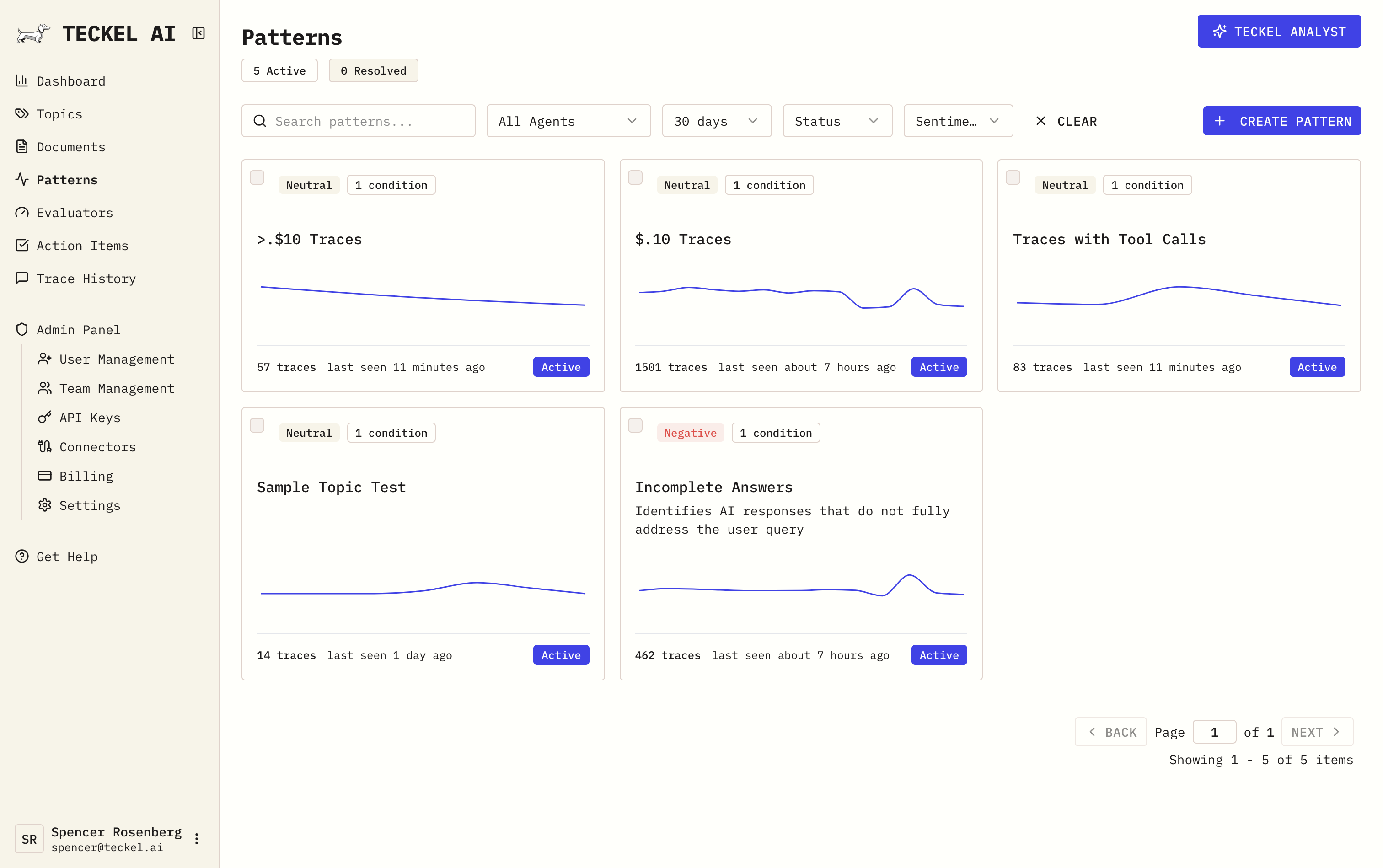

Patterns

Sentry-like issue detection for AI systems, made up of LLM-as-a-Judge evaluations and deterministic system analytics.

Creating Patterns

Define conditions that identify problematic traces:

Evaluator conditions

Completeness < 0.7

Accuracy < 0.8

"Contains Disclaimer" = false

Metric conditions

Cost > $0.50

Latency > 5000ms

Output tokens > 2000

Combining conditions (AND/OR):

(Completeness < 0.7) AND (Latency > 5000ms)
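Conceptually, a pattern is a boolean expression evaluated against each trace. The sketch below shows one way to model evaluator and metric conditions and combine them with AND/OR. The helper names and the flat dictionary trace format (`completeness`, `latency_ms`, `cost_usd`) are assumptions for illustration, not Teckel's internal representation.

```python
from typing import Any, Callable, Mapping

Trace = Mapping[str, Any]
Condition = Callable[[Trace], bool]


def below(key: str, limit: float) -> Condition:
    return lambda trace: trace[key] < limit


def above(key: str, limit: float) -> Condition:
    return lambda trace: trace[key] > limit


def equals(key: str, value: Any) -> Condition:
    return lambda trace: trace[key] == value


def all_of(*conditions: Condition) -> Condition:
    """AND: every condition must hold."""
    return lambda trace: all(c(trace) for c in conditions)


def any_of(*conditions: Condition) -> Condition:
    """OR: at least one condition must hold."""
    return lambda trace: any(c(trace) for c in conditions)


# (Completeness < 0.7) AND (Latency > 5000ms)
slow_and_incomplete = all_of(below("completeness", 0.7), above("latency_ms", 5000))

trace = {"completeness": 0.55, "latency_ms": 6200, "cost_usd": 0.12}
print(slow_and_incomplete(trace))  # True
```

Because `all_of` and `any_of` return conditions themselves, they nest freely, for example `any_of(slow_and_incomplete, above("cost_usd", 0.50))`.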

Example Patterns

| Name | Conditions | Purpose |
|---|---|---|
| Billing issues | Completeness < 0.7 AND Topic = "Billing" | Catch poor billing answers |
| High cost | Cost > $0.50 | Flag expensive traces |
| Compliance | "Contains Disclaimer" = false AND Topic = "Medical" | Ensure disclaimers |
| Slow responses | Latency > 5000ms | Monitor performance |

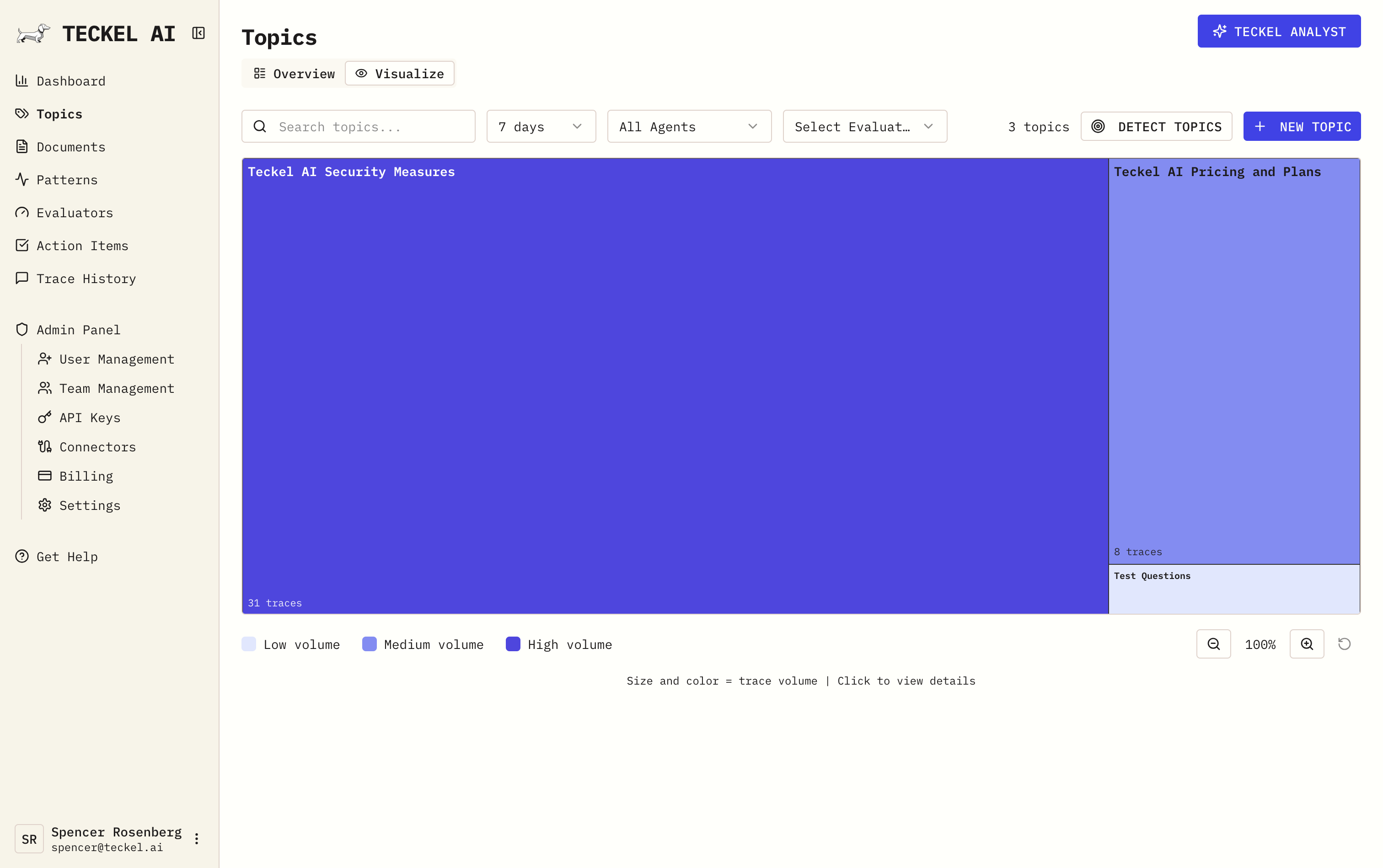

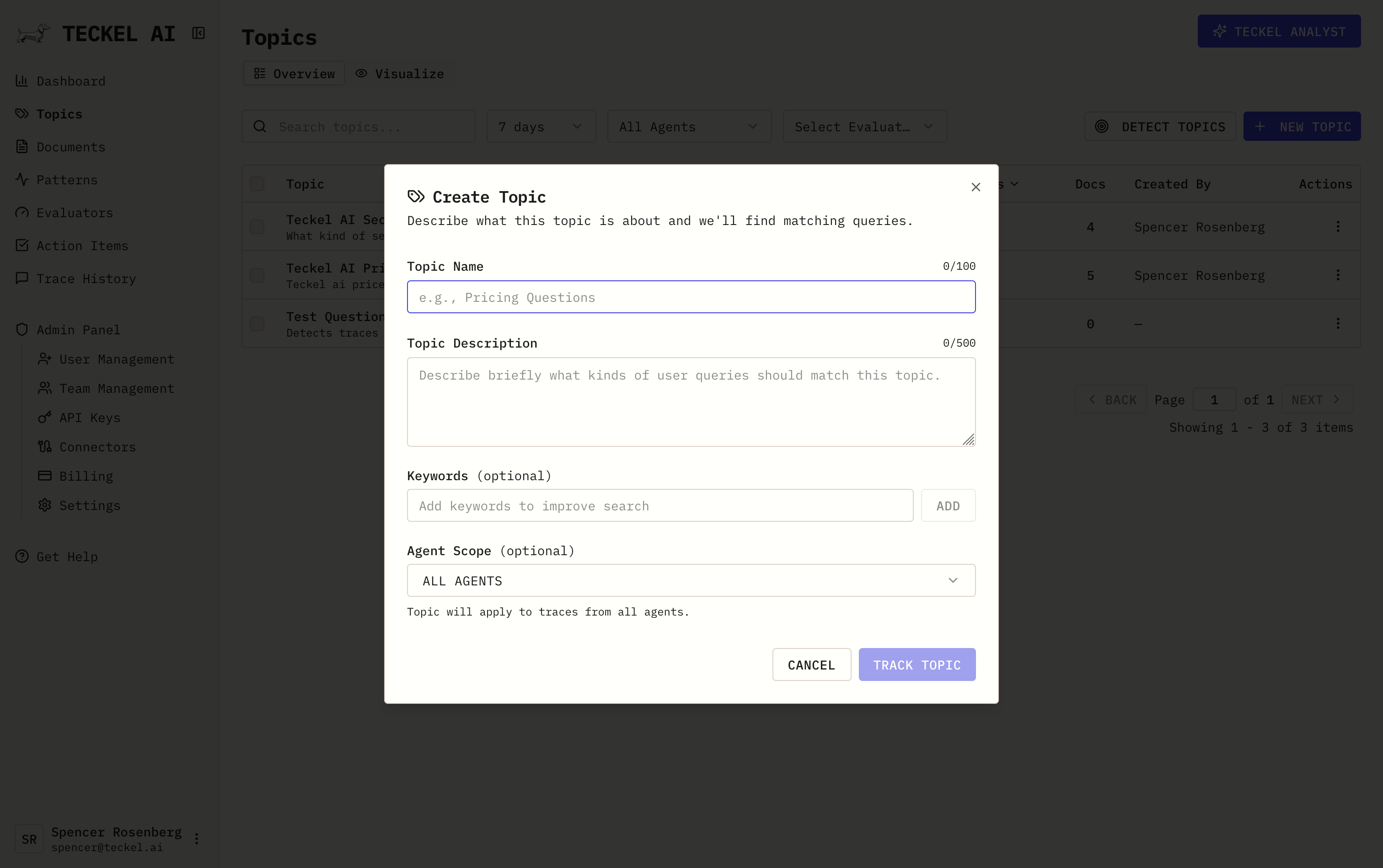

Topics

Organize traces into meaningful categories using small, custom-trained AI models that are very cheap to run.

Train a Topic

Label examples to train a small model:

- Add positive examples (should match)

- Add negative examples (should not match)

- Custom classifier improves with more examples

Best for: Known categories, high-precision needs, business-critical areas to monitor
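To show how positive and negative examples define a topic, here is a toy stand-in: a bag-of-words classifier that assigns a trace to the topic when it is closer to the positive examples than to the negative ones. This guide does not describe Teckel's actual model, so the `TopicClassifier` class and its cosine-similarity approach are assumptions chosen only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def centroid(examples):
    """Sum word counts across all examples of one class."""
    total = Counter()
    for text in examples:
        total.update(tokenize(text))
    return total


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TopicClassifier:
    def __init__(self, positives, negatives):
        self.pos = centroid(positives)
        self.neg = centroid(negatives)

    def matches(self, text):
        """True when the text is more similar to the positive examples."""
        vec = Counter(tokenize(text))
        return cosine(vec, self.pos) > cosine(vec, self.neg)


billing = TopicClassifier(
    positives=[
        "How do I get a refund for my invoice?",
        "I was charged twice on my bill",
        "Update my billing address",
    ],
    negatives=[
        "How do I reset my password?",
        "The app crashes on login",
    ],
)

print(billing.matches("Why was I charged twice this month?"))    # True
print(billing.matches("I forgot my password and cannot login"))  # False
```

Even in this toy version, adding more labeled examples on either side shifts the centroids and sharpens the boundary, which is the same reason a trained classifier improves as you label more examples.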

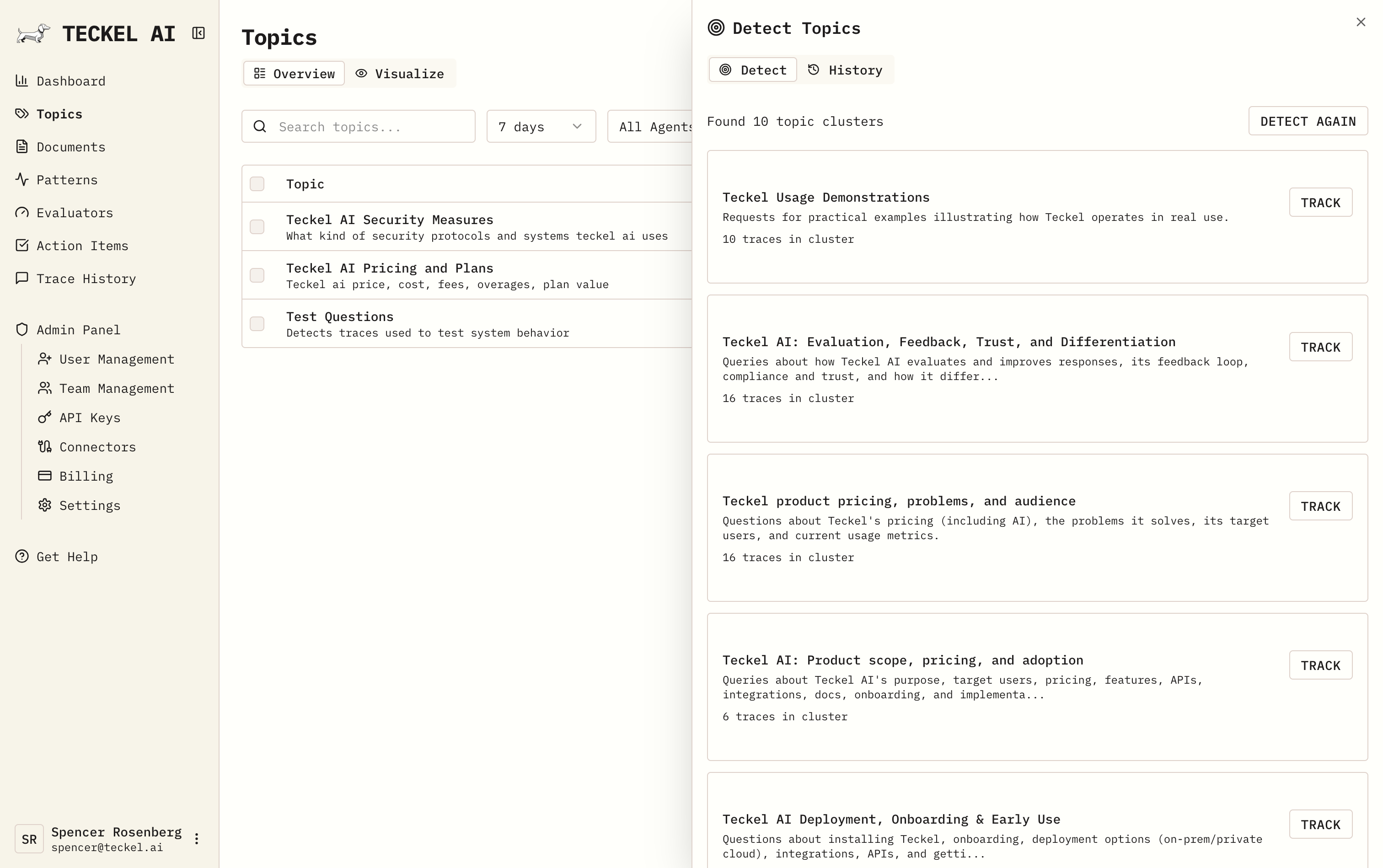

Auto-Detect Topics

Let our advanced clustering algorithm find emerging patterns:

- Analyzes traces for semantic similarity

- Suggests topic names

- Review and accept to start tracking

Best for: Discovering unknown patterns, finding new areas to consider tracking over time

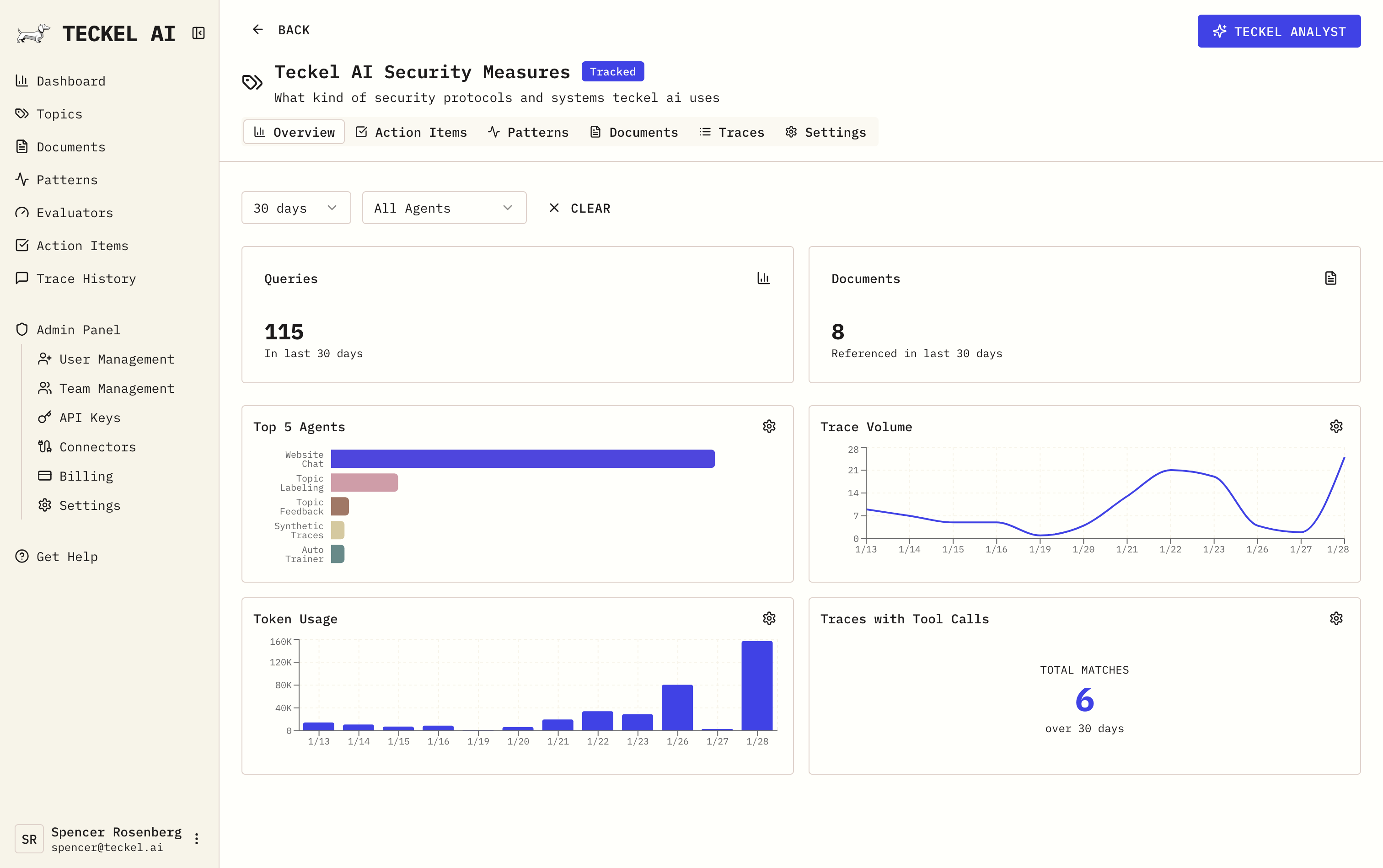

Topic Detail

- Aggregate metrics and trends across given topic areas

- Teckel Analyst provides feedback and action items on demand

- List of matching traces, sortable, groupable, and searchable

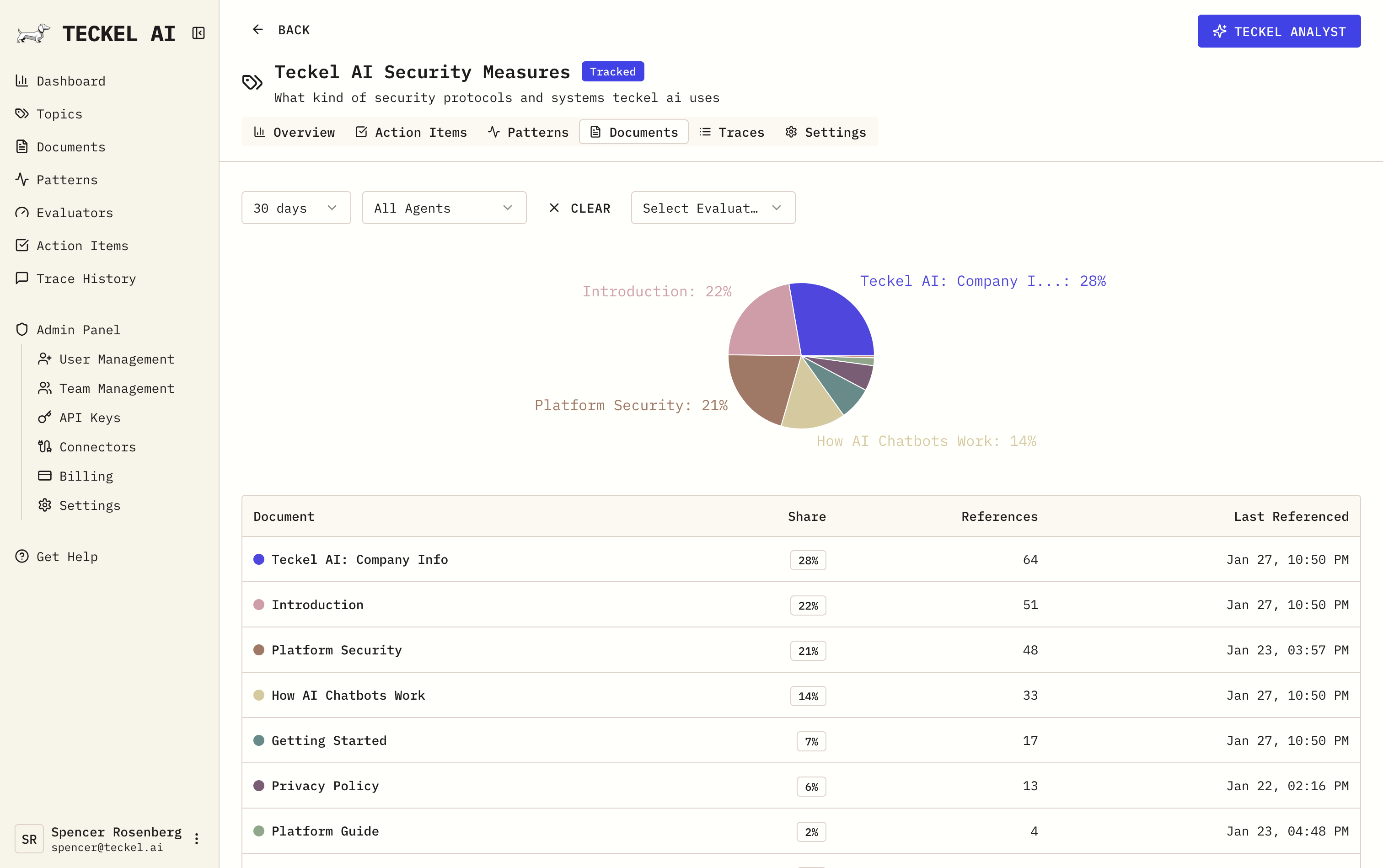

- Documents frequently retrieved for this topic

- Document retrieval and performance within topic

- Detect and find knowledge gaps across your RAG system

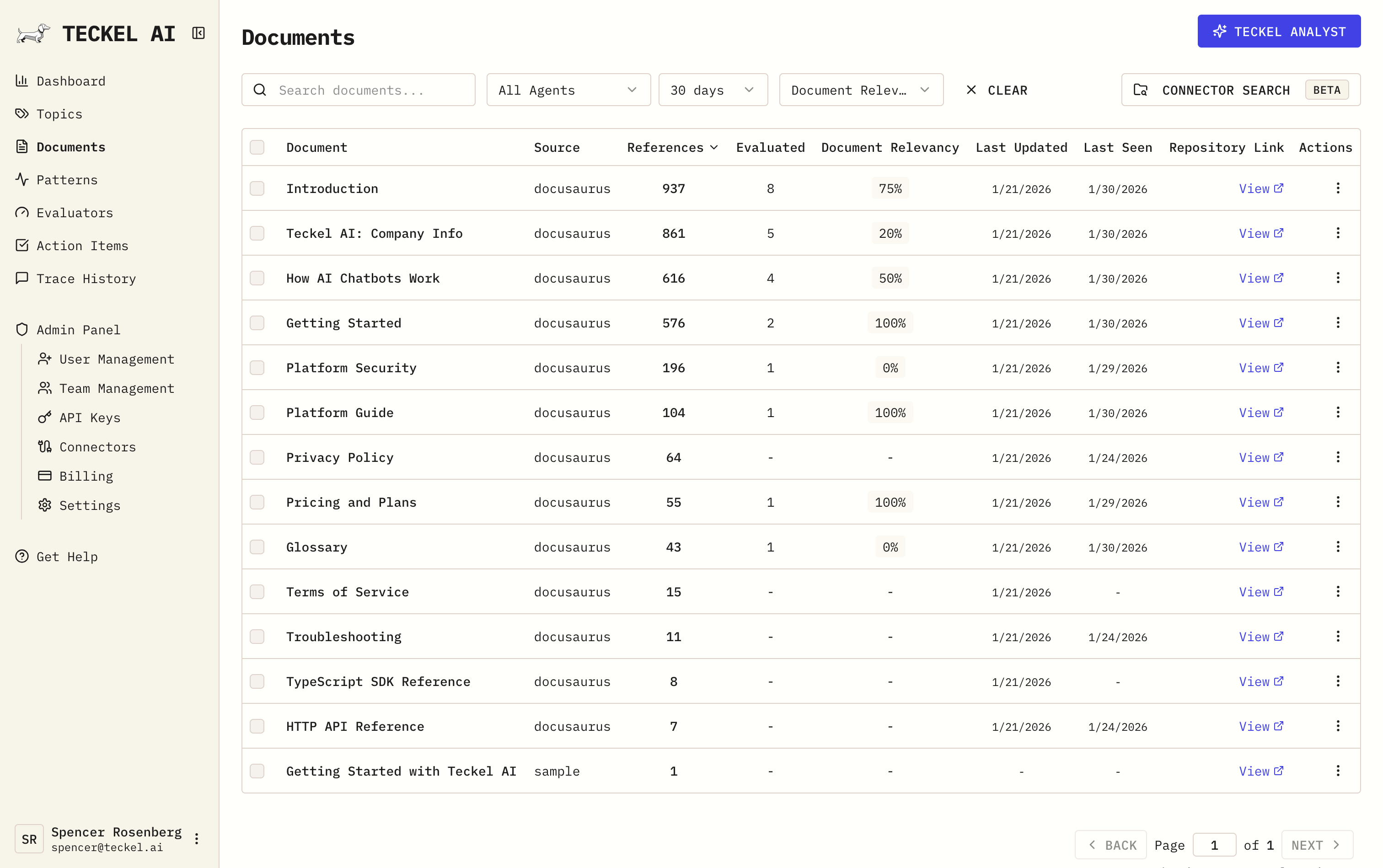

Documents

Track knowledge base health and review the external sources your AI learns from and pulls information from.

Document list:

- All documents appearing in traces

- Performance and utilization metrics per document

- Metadata including source freshness, ownership, intranet URL, and more

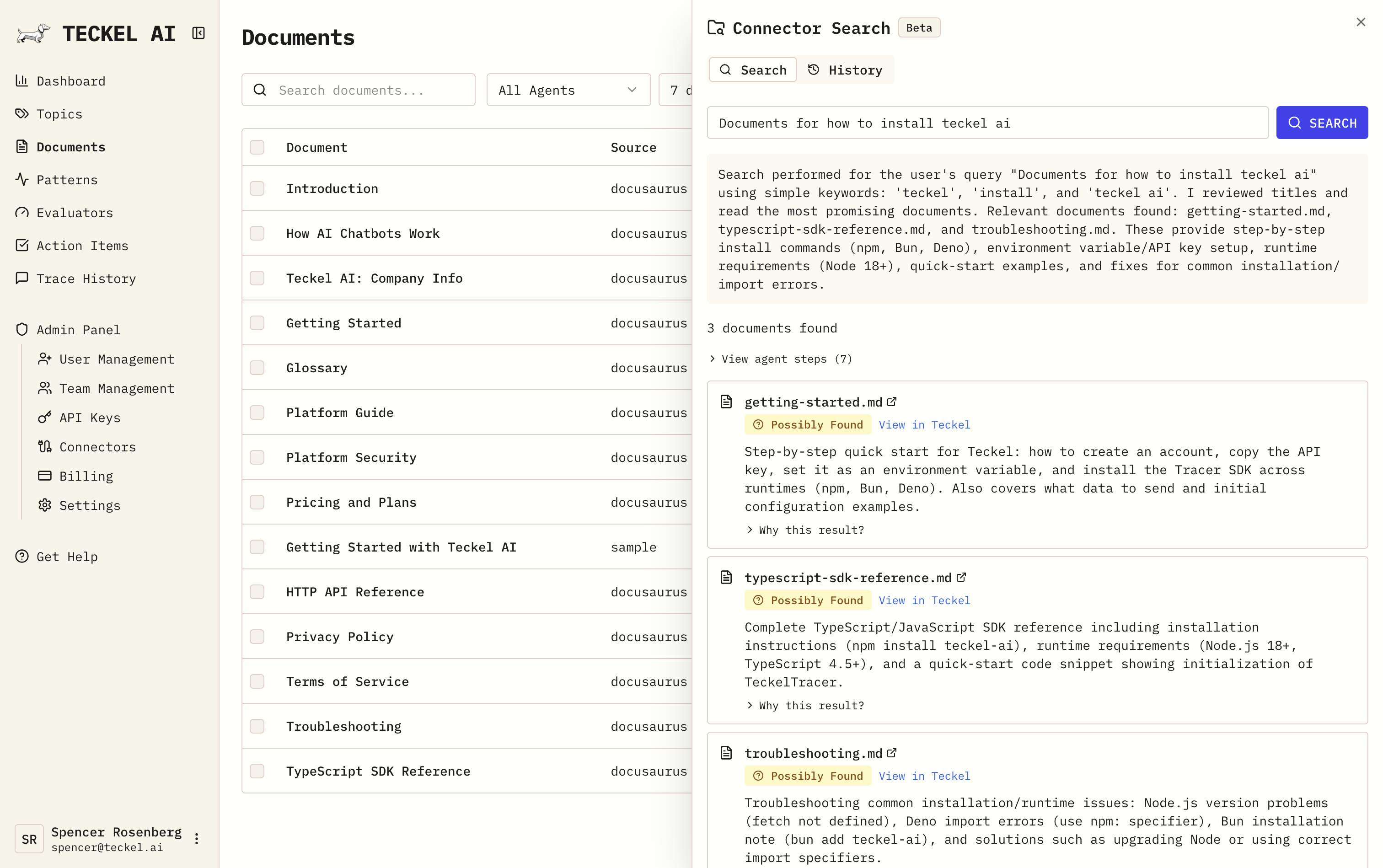
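A utilization metric such as retrieval frequency can be pictured as a count of how many traces retrieved each document. A minimal sketch, assuming a hypothetical trace format in which each trace carries a `document_ids` list (one entry per retrieved chunk); the field name and the `top_documents` helper are illustrative, not Teckel's schema.

```python
from collections import Counter


def top_documents(traces, n=10):
    """Return the n most frequently retrieved documents as (doc_id, trace_count) pairs."""
    counts = Counter()
    for trace in traces:
        # Count each document once per trace, even if several of its chunks were retrieved.
        counts.update(set(trace["document_ids"]))
    return counts.most_common(n)


traces = [
    {"document_ids": ["refund-policy", "pricing", "refund-policy"]},
    {"document_ids": ["refund-policy"]},
    {"document_ids": ["refund-policy", "pricing", "onboarding"]},
]

print(top_documents(traces, n=2))  # [('refund-policy', 3), ('pricing', 2)]
```

Counting per trace rather than per chunk keeps a long document that splits into many chunks from dominating the ranking.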

Connectors

- Google Drive: Search your Drive to determine whether relevant documents exist and whether they are indexed in Teckel. This helps you find out whether your AI is having retrieval issues with RAG

- Configure for your org in Admin Panel → Connectors

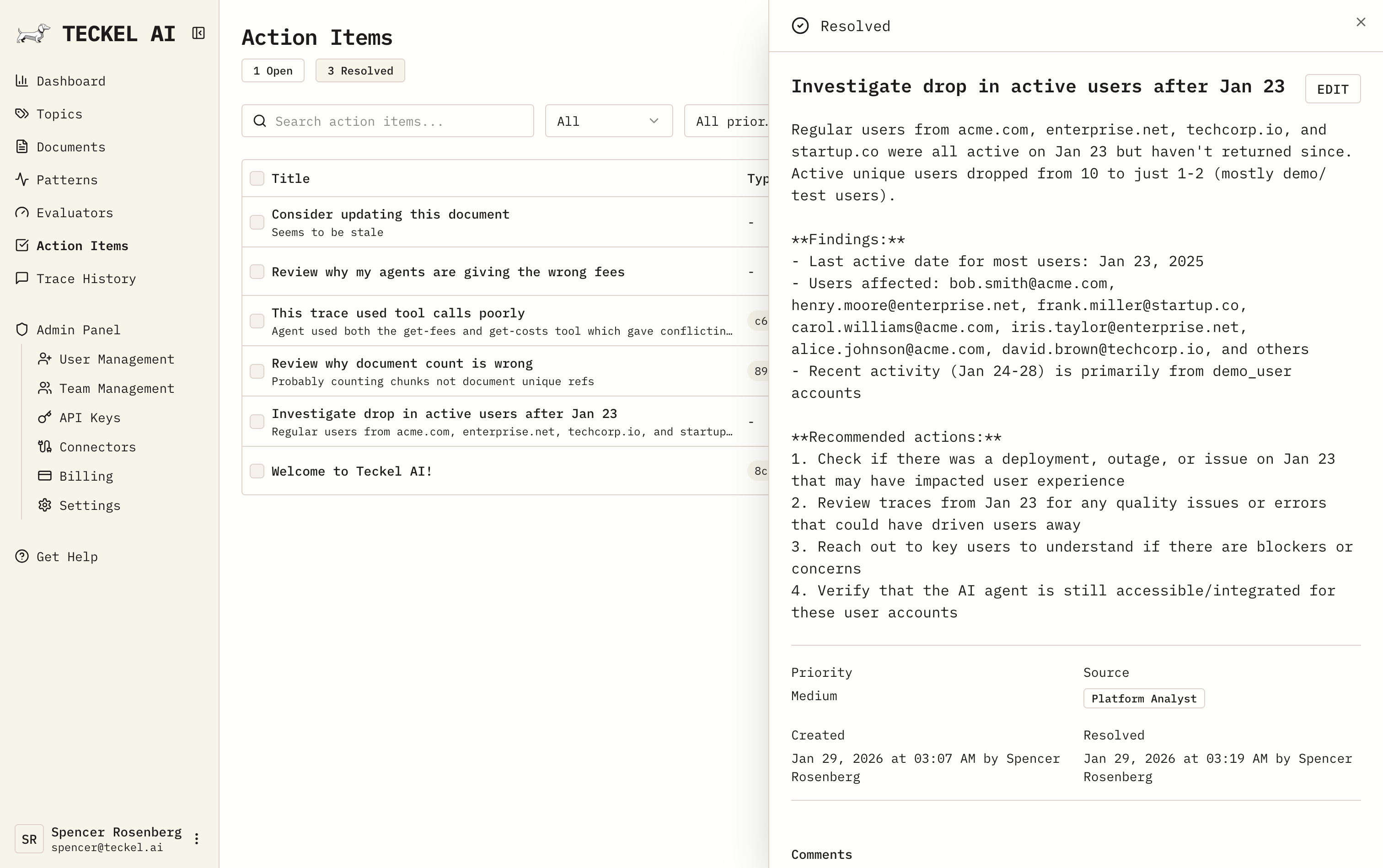

Action Items

Tasks to improve your AI system, prioritized by impact.

Action Items supports team collaboration and ticket creation for problems found in your AI agents. You can create an action item manually, or ask the Teckel Analyst to flag an issue it identifies while you work on a problem, and it will write it up for you.

Workflow:

- Review action items sorted by priority

- Click through to see supporting traces

- Implement the fix (update docs, adjust prompts, etc.)

- Mark as resolved

- Watch related pattern/evaluator scores improve

Action items create a direct path from "something is wrong" to "here's what to fix."

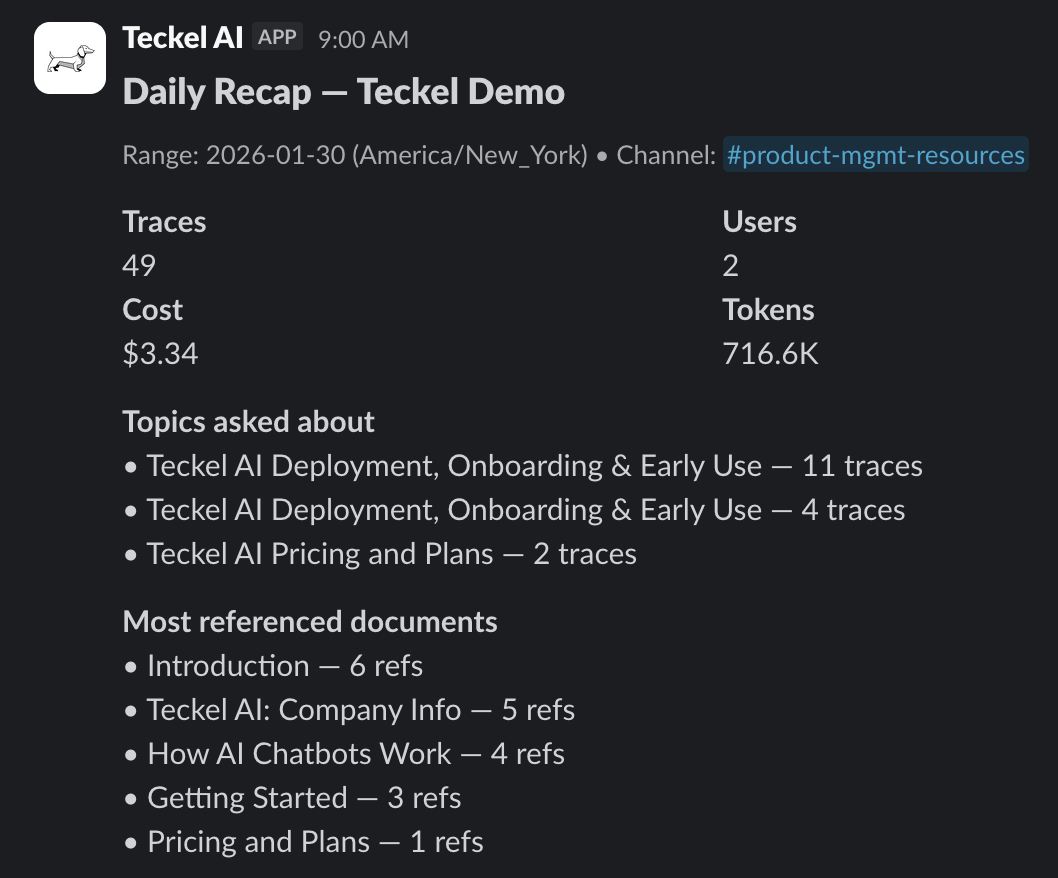

Slack

Daily system recaps sent to you via Slack bot.

Get a scheduled recap of top topics, patterns, agents, cited documents, models used, and daily cost, shared directly to the Slack channel of your choice. More is coming, including automated alerting for detected regressions across topics and patterns, and proactive Teckel Analyst feedback on identified issues.

Teckel CLI/MCP (Coming Soon)

The Teckel Analyst, directly in your terminal. Pull logs, traces, and issues without leaving your editor, then fix them immediately with your code agents.

What it does:

- Instant access to issues: Give your code agent CLI access, ask "What's broken?" and get actionable answers with trace evidence in context

- Direct to fix: Identify an issue, then hand it off to your code agent (Claude Code, Cursor, etc.) to implement the fix in the same session

- Cost visibility: Monitor spend across models and time periods wherever you’d like

Workflow:

- Run teckel in your terminal

- Ask: "What are the top issues this week?"

- Drill into a specific pattern or trace

- Pass context directly to your code agent: "Identify why this agent is triple calling the same tool"

- Ship the fix without context-switching

Why CLI?

- Seamless handoff to code agents for immediate fixes

- Scriptable for CI/CD and automated monitoring

- Honestly, who isn’t burnt out from reading dashboards?

Stay tuned for the release announcement.

Workflow

Typical monitoring workflow:

- Set up evaluators for metrics you care about

- Create patterns combining evaluator + topic conditions

- Train topic classifiers for important business areas

- Act on Teckel Analyst feedback to fix identified issues

- Watch patterns resolve as improvements take effect

Teckel AI provides this as a continuous improvement loop: detect → understand → fix → track for regressions.