How AI Chatbots Use Your Company's Knowledge

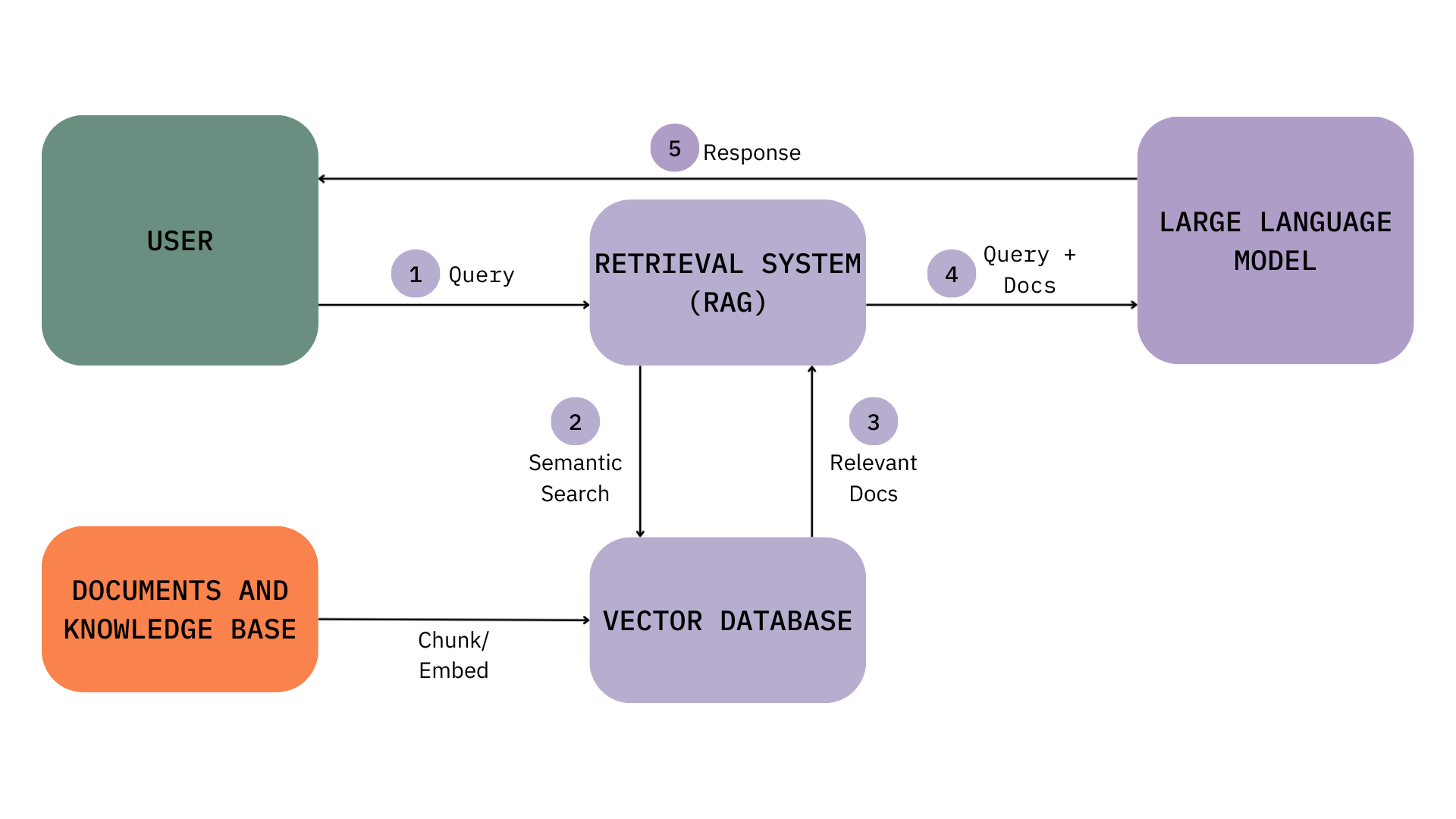

Modern AI agents and chat systems answer questions by retrieving information from your company's knowledge base, so they can apply your policies and documentation when they respond. The most common approach is called RAG (Retrieval-Augmented Generation). Understanding how it works helps explain why your agents sometimes give magical answers and sometimes fail completely at basic tasks.

How RAG Works Step by Step

1. User Asks a Question: Someone types "What's the return policy for this item?" into a customer support chatbot.

2. Semantic Search: The system converts that question into a mathematical representation of its meaning (an embedding) and searches your knowledge base for similar content. Because it uses semantic similarity rather than keyword matching alone, "refund process" and "return policy" are recognized as related.

3. Relevant Documents Retrieved: The search returns the chunks of your documents that seem most relevant, such as sections from your returns guide, shipping policy, or FAQ.

4. Query + Documents Sent to AI: The AI model receives both the original question AND those document chunks as context.

5. AI Generates Response: The AI writes an answer based on what it found in your documents.
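Steps 2 to 4 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product implements RAG: the documents, the three-dimensional "embeddings", and the function names `retrieve` and `build_prompt` are all hypothetical. A real system would compute embeddings with an embedding model and store them in a vector index. Step 5 (the actual model call) is left out.

```python
import math

# Hypothetical knowledge base with toy, hand-written 3-dimensional "embeddings".
# A real system would produce these vectors with an embedding model.
KNOWLEDGE_BASE = [
    {"id": "returns-guide", "text": "Items can be returned within 30 days for a full refund.",
     "embedding": [0.9, 0.1, 0.0]},
    {"id": "shipping-policy", "text": "Standard shipping takes 3-5 business days.",
     "embedding": [0.1, 0.9, 0.1]},
    {"id": "faq-warranty", "text": "Electronics carry a one-year warranty.",
     "embedding": [0.3, 0.2, 0.8]},
]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_embedding, k=2):
    """Step 3: return the k chunks whose embeddings are closest to the query."""
    scored = [(cosine_similarity(query_embedding, doc["embedding"]), doc)
              for doc in KNOWLEDGE_BASE]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(question, chunks):
    """Step 4: combine the original question with the retrieved chunks as context."""
    context = "\n".join(f"[{c['id']}] {c['text']}" for c in chunks)
    return (
        "Answer using only the context below and cite chunk ids.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Step 2: a toy embedding standing in for "What's the return policy for this item?"
query_vec = [0.85, 0.15, 0.05]
top_chunks = retrieve(query_vec, k=2)
prompt = build_prompt("What's the return policy for this item?", top_chunks)
print([c["id"] for c in top_chunks])  # → ['returns-guide', 'faq-warranty']
```

Every failure mode discussed next maps onto one of these functions: an empty or stale `KNOWLEDGE_BASE`, a `retrieve` that ranks the wrong chunk first, or a model that ignores the context in the prompt.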

Where Things Go Wrong

This system works well when:

- Your documents contain the information users need

- The search system finds the right documents

- The AI correctly interprets and cites the content

But in real life, problems emerge from:

- Missing information: Users ask about topics you haven't documented

- Outdated content: Old policies get cited because nobody updated them

- Poor retrieval: The right document exists but doesn't get found

- Hallucinations: The AI returns output that isn't supported by the source documents

The challenge is that you often don't know which problem you have. Is the AI making things up? Is your documentation incomplete? Is the search broken? Without visibility, you're guessing.
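One way to make those questions answerable is to record a few signals per interaction and apply rough rules to them. The sketch below is a hypothetical triage heuristic: the signal names and thresholds are assumptions chosen for illustration, and `grounded_fraction` is assumed to come from some separate evaluator. Note that a low retrieval score alone cannot tell missing documentation apart from a retrieval failure; you still have to check whether the relevant document exists.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class TraceSignals:
    # Hypothetical per-interaction signals an observability tool might collect.
    top_retrieval_score: float        # best similarity among retrieved chunks (0 to 1)
    oldest_cited_doc: Optional[date]  # last-updated date of the oldest cited document
    grounded_fraction: float          # share of answer claims supported by the sources


def triage(s, today, min_score=0.5, max_age_days=365, min_grounded=0.8):
    """Return rough labels for which failure mode(s) a single interaction shows."""
    labels = []
    if s.top_retrieval_score < min_score:
        # Nothing relevant came back: either the topic is undocumented or search missed it.
        labels.append("missing information or poor retrieval")
    if s.oldest_cited_doc is not None and (today - s.oldest_cited_doc).days > max_age_days:
        labels.append("possibly outdated content")
    if s.grounded_fraction < min_grounded:
        labels.append("possible hallucination")
    return labels or ["no issue detected"]


today = date(2024, 6, 1)
print(triage(TraceSignals(0.32, None, 0.4), today))
# → ['missing information or poor retrieval', 'possible hallucination']
print(triage(TraceSignals(0.91, date(2022, 1, 15), 0.95), today))
# → ['possibly outdated content']
```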

Why AI Systems Need Observability

Traditional software has mature observability: metrics, logs, traces, and alerts. When something breaks, you know. AI systems often lack this infrastructure entirely.

What traditional observability gives you:

- Know when things fail (error rates, latency spikes)

- Understand why they failed (logs, stack traces)

- Track trends over time (metrics dashboards)

- Get alerted before users complain (anomaly detection)

What AI systems typically lack:

- No clear definition of "failure" (bad answers aren't exceptions)

- No structured way to measure quality at scale

- No automatic categorization of what users ask

- No systematic way to identify patterns in failures

This is why AI teams often rely on user complaints to find problems. By the time someone reports an issue, many others have already experienced it.

Types of AI Observability

Different approaches to monitoring AI systems:

Trace logging: Record every interaction, including query, response, latency, tokens, and cost. This is an essential foundation, but it doesn't tell you whether answers are good.
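A trace record can be as simple as one structured row per call. In this sketch `fake_model` stands in for a real LLM client, and the per-token prices are made-up placeholders, not any provider's actual rates. Nothing in the record says whether the answer was correct, which is exactly the gap described above.

```python
import time
from dataclasses import asdict, dataclass

# Hypothetical per-token prices in USD; real prices depend on your model provider.
PRICE_PER_INPUT_TOKEN = 0.000003
PRICE_PER_OUTPUT_TOKEN = 0.000015


@dataclass
class Trace:
    query: str
    response: str
    latency_ms: float
    input_tokens: int
    output_tokens: int
    cost_usd: float


def log_interaction(query, call_model):
    """Time a model call and record it as a structured trace."""
    start = time.perf_counter()
    response, input_tokens, output_tokens = call_model(query)
    latency_ms = (time.perf_counter() - start) * 1000
    cost = input_tokens * PRICE_PER_INPUT_TOKEN + output_tokens * PRICE_PER_OUTPUT_TOKEN
    return Trace(query, response, latency_ms, input_tokens, output_tokens, cost)


def fake_model(query):
    # Stand-in for a real LLM client: returns (text, input tokens, output tokens).
    return "Returns are accepted within 30 days.", 1200, 40


trace = log_interaction("What's the return policy?", fake_model)
print(asdict(trace))
```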

LLM-as-a-judge evaluation: Use another LLM to score responses on dimensions like completeness, accuracy, or tone. Flexible, but requires careful prompt engineering.
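In practice a judge is mostly a prompt plus defensive parsing of its output. The prompt wording, the 1 to 5 scale, and the JSON shape below are assumptions for illustration; `call_llm` is any function you supply that sends a prompt to a model and returns its text.

```python
import json

JUDGE_PROMPT = """You are grading a customer support answer.
Question: {question}
Source documents: {context}
Answer: {answer}

Score completeness and accuracy from 1 (poor) to 5 (excellent).
Reply with JSON only: {{"completeness": <int>, "accuracy": <int>, "reason": "<short>"}}"""


def judge(question, context, answer, call_llm):
    """Ask a judge model for scores; return None if its reply is unusable."""
    prompt = JUDGE_PROMPT.format(question=question, context=context, answer=answer)
    raw = call_llm(prompt)
    try:
        result = json.loads(raw)
    except json.JSONDecodeError:
        # Judges sometimes ignore the format: treat that as "unscored", not as a low score.
        return None
    if not isinstance(result, dict):
        return None
    for key in ("completeness", "accuracy"):
        score = result.get(key)
        if not isinstance(score, int) or not 1 <= score <= 5:
            return None
    return result


def fake_judge(prompt):
    # Stand-in for a real model call.
    return '{"completeness": 4, "accuracy": 5, "reason": "Cites the returns guide."}'


result = judge("What's the return policy?", "[returns-guide] 30-day returns.",
               "Returns are accepted within 30 days.", fake_judge)
```

Returning `None` for malformed replies keeps parsing failures out of your quality averages instead of silently counting them as bad answers.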

Classification and topic detection: Categorize queries to understand what users ask about. This helps identify trends and measure performance by category.
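The simplest form of topic detection is a keyword rule; trained classifiers or LLM-based labeling are the usual upgrades. The topics and keywords here are hypothetical. The interesting part is the second function: once each query carries a topic, quality scores can be broken down per category.

```python
from collections import defaultdict

# Hypothetical topics and keywords; a trained classifier or an LLM would replace these rules.
TOPIC_KEYWORDS = {
    "returns": ("return", "refund", "exchange"),
    "shipping": ("shipping", "delivery", "tracking"),
    "billing": ("invoice", "charge", "payment"),
}


def classify(query):
    """Return the first topic whose keywords appear in the query, else 'other'."""
    text = query.lower()
    for topic, keywords in TOPIC_KEYWORDS.items():
        if any(word in text for word in keywords):
            return topic
    return "other"


def score_by_topic(scored_queries):
    """Average quality score per topic, given (query, score) pairs."""
    totals = defaultdict(lambda: [0.0, 0])  # topic -> [sum of scores, count]
    for query, score in scored_queries:
        bucket = totals[classify(query)]
        bucket[0] += score
        bucket[1] += 1
    return {topic: total / count for topic, (total, count) in totals.items()}


scores = score_by_topic([
    ("How do I get a refund?", 4),
    ("Where is my delivery?", 2),
    ("Can I return these shoes?", 5),
    ("Do you sell gift cards?", 3),
])
print(scores)  # → {'returns': 4.5, 'shipping': 2.0, 'other': 3.0}
```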

Pattern detection: Alert when specific conditions are met, such as low scores on certain topics, cost spikes, or latency issues. This moves monitoring from reactive to proactive.
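Pattern rules can be expressed as data and checked against recent traces. The `Pattern` fields, metric names, and thresholds in this sketch are hypothetical, and it assumes the caller has already filtered `traces` down to the time window of interest.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Pattern:
    # Hypothetical rule shape: alert when a metric's average crosses a threshold.
    name: str
    topic: Optional[str]  # None means "all topics"
    metric: str           # key to read from each trace, e.g. "score" or "cost_usd"
    threshold: float
    direction: str        # "below" or "above"


def check_patterns(patterns, traces):
    """Return one alert message per pattern whose average breaches its threshold."""
    alerts = []
    for p in patterns:
        values = [t[p.metric] for t in traces if p.topic is None or t["topic"] == p.topic]
        if not values:
            continue
        avg = mean(values)
        breached = avg < p.threshold if p.direction == "below" else avg > p.threshold
        if breached:
            alerts.append(f"{p.name}: average {p.metric} = {avg:.4g}")
    return alerts


traces = [
    {"topic": "returns", "score": 2.0, "cost_usd": 0.004},
    {"topic": "returns", "score": 3.0, "cost_usd": 0.005},
    {"topic": "shipping", "score": 4.5, "cost_usd": 0.030},
]
patterns = [
    Pattern("Low scores on returns", "returns", "score", 3.0, "below"),
    Pattern("Low scores on shipping", "shipping", "score", 3.0, "below"),
    Pattern("Cost spike", None, "cost_usd", 0.01, "above"),
]
alerts = check_patterns(patterns, traces)
```

Here the returns rule and the cost rule fire, while shipping (average score 4.5) stays quiet.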

Document/retrieval analysis: Track which documents get retrieved and whether they help or hurt. This identifies content gaps and retrieval problems.
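Retrieval analysis starts by joining two things you may already log: which documents each answer used, and how that answer scored. This sketch assumes each trace is a dict with hypothetical `retrieved_docs` and `score` keys; the document ids and thresholds are illustrative.

```python
from collections import defaultdict


def document_report(traces, low_score=3.0, min_retrievals=2):
    """Per document: retrieval count and average score of the answers that used it."""
    stats = defaultdict(lambda: {"retrievals": 0, "score_sum": 0.0})
    for t in traces:
        for doc_id in t["retrieved_docs"]:
            stats[doc_id]["retrievals"] += 1
            stats[doc_id]["score_sum"] += t["score"]
    report = {}
    for doc_id, s in stats.items():
        avg = s["score_sum"] / s["retrievals"]
        # Frequently retrieved documents tied to poor answers may be outdated or misleading.
        report[doc_id] = {
            "retrievals": s["retrievals"],
            "avg_score": avg,
            "suspect": s["retrievals"] >= min_retrievals and avg < low_score,
        }
    return report


def unanswered_rate(traces):
    """Share of queries where nothing was retrieved: a rough content-gap signal."""
    if not traces:
        return 0.0
    return sum(1 for t in traces if not t["retrieved_docs"]) / len(traces)


traces = [
    {"retrieved_docs": ["returns-2021", "faq"], "score": 2.0},
    {"retrieved_docs": ["returns-2021"], "score": 1.0},
    {"retrieved_docs": ["faq"], "score": 5.0},
    {"retrieved_docs": [], "score": 1.0},
]
report = document_report(traces)
```

In this toy data, `returns-2021` is flagged as suspect (retrieved twice, average score 1.5), and a quarter of queries retrieved nothing at all.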

Teckel's Approach

Teckel combines these approaches into a unified platform:

- Evaluators: Define custom metrics using LLM-as-a-judge or code-based rules

- Topics: Train classifiers or auto-detect emerging themes in your queries

- Patterns: Set conditions that trigger alerts when issues arise

- Documents: Connect knowledge sources to verify coverage and identify gaps

The goal is systematic visibility into your AI system: not just logging traces, but understanding what's working and what isn't.
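Code-based rules are deterministic checks that need no LLM call. The evaluators below are hypothetical examples in plain Python, not Teckel's API; each takes a trace dict (with assumed `response` and `retrieved_docs` keys) and returns pass or fail.

```python
import re


# Hypothetical code-based evaluators: each takes a trace dict and returns True (pass) or False.
def cites_a_source(trace):
    """Pass if the answer references at least one retrieved chunk id, e.g. [returns-guide]."""
    cited = set(re.findall(r"\[([\w-]+)\]", trace["response"]))
    return bool(cited & set(trace["retrieved_docs"]))


def within_length(trace, max_words=150):
    """Pass if the answer stays under a word budget."""
    return len(trace["response"].split()) <= max_words


def no_promises(trace):
    """Fail if the bot uses 'guarantee'/'guaranteed' (a hypothetical policy rule)."""
    return not re.search(r"\bguarantee(d)?\b", trace["response"], re.IGNORECASE)


EVALUATORS = {
    "cites_a_source": cites_a_source,
    "within_length": within_length,
    "no_promises": no_promises,
}


def run_evaluators(trace):
    return {name: fn(trace) for name, fn in EVALUATORS.items()}


good = {"response": "You can return it within 30 days [returns-guide].",
        "retrieved_docs": ["returns-guide", "faq"]}
bad = {"response": "Refunds are guaranteed within 24 hours.",
       "retrieved_docs": ["returns-guide"]}
print(run_evaluators(good))
print(run_evaluators(bad))
```

Rules like these are cheap enough to run on every trace, which makes them a natural complement to the slower, more flexible LLM-as-a-judge scores.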

Do I Need RAG to Use Teckel?

Teckel monitors any AI system that generates responses, not just RAG. If your company has:

- An AI system that relies on MCP servers to pull in information

- A customer support bot with a system prompt the length of Moby Dick

- Any agentic application that uses tool calls

Then Teckel can show you how it's performing. Without retrieval data, some features (like document analytics) won't apply, but evaluators, topics, and patterns work on any AI output.

AI Agents and Multi-Step Workflows

Beyond RAG, newer AI systems often act as agents that:

- Use tools: Search the web, query databases, call APIs, execute code

- Make decisions: Choose which tools to use based on context

- Execute multi-step plans: Break complex tasks into sequential operations

These agentic workflows create different observability challenges:

- Tool failures: Did the right tool get called? Did it return useful data?

- Decision quality: Was the agent's reasoning sound?

- Workflow efficiency: How many steps did it take? Where are bottlenecks?

Teckel captures agent workflows as spans within traces, with OpenTelemetry (OTel) support, giving you visibility into each step of execution and into the agents' thought processes. Combined with evaluators and patterns, this lets you detect when agents go off-track and identify systematic issues across your fleet.
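To make the span idea concrete, here is a small in-memory tracer in plain Python. It is a hypothetical stand-in, not Teckel's SDK; in practice you would more likely instrument with the OpenTelemetry SDK (for example `start_as_current_span` in its Python API). The structure is the same: each agent step becomes a child span of the run, and a failed tool call shows up as a span with an error status. The tool names and attributes are made up for illustration.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    attributes: dict = field(default_factory=dict)
    status: str = "ok"
    duration_ms: float = 0.0


class Tracer:
    """Minimal in-memory tracer: nested `with` blocks become parent/child spans."""

    def __init__(self):
        self.trace_id = uuid.uuid4().hex
        self.spans = []   # finished spans, children before their parent
        self._stack = []  # spans currently open

    @contextmanager
    def span(self, name, **attributes):
        parent = self._stack[-1].span_id if self._stack else None
        s = Span(name, self.trace_id, uuid.uuid4().hex[:16], parent, dict(attributes))
        self._stack.append(s)
        start = time.perf_counter()
        try:
            yield s
        except Exception as exc:
            s.status = "error"
            s.attributes["error"] = repr(exc)
            raise
        finally:
            s.duration_ms = (time.perf_counter() - start) * 1000
            self._stack.pop()
            self.spans.append(s)


tracer = Tracer()
with tracer.span("agent.run", query="Where is order 123?"):
    with tracer.span("tool.lookup_order", order_id="123") as s:
        s.attributes["result"] = {"status": "shipped"}
    try:
        with tracer.span("tool.get_tracking", carrier="unknown"):
            raise TimeoutError("carrier API timed out")
    except TimeoutError:
        pass  # the agent carries on without tracking data
    with tracer.span("llm.respond"):
        pass

failed = [s.name for s in tracer.spans if s.status == "error"]
steps = sum(1 for s in tracer.spans if s.parent_id is not None)
print(failed, steps)  # → ['tool.get_tracking'] 3
```

Questions like "did the right tool get called?" and "how many steps did it take?" then become simple queries over the recorded spans.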

Next Steps

- Get Started with integration

- Explore the Platform Guide for complete feature reference